Agentica

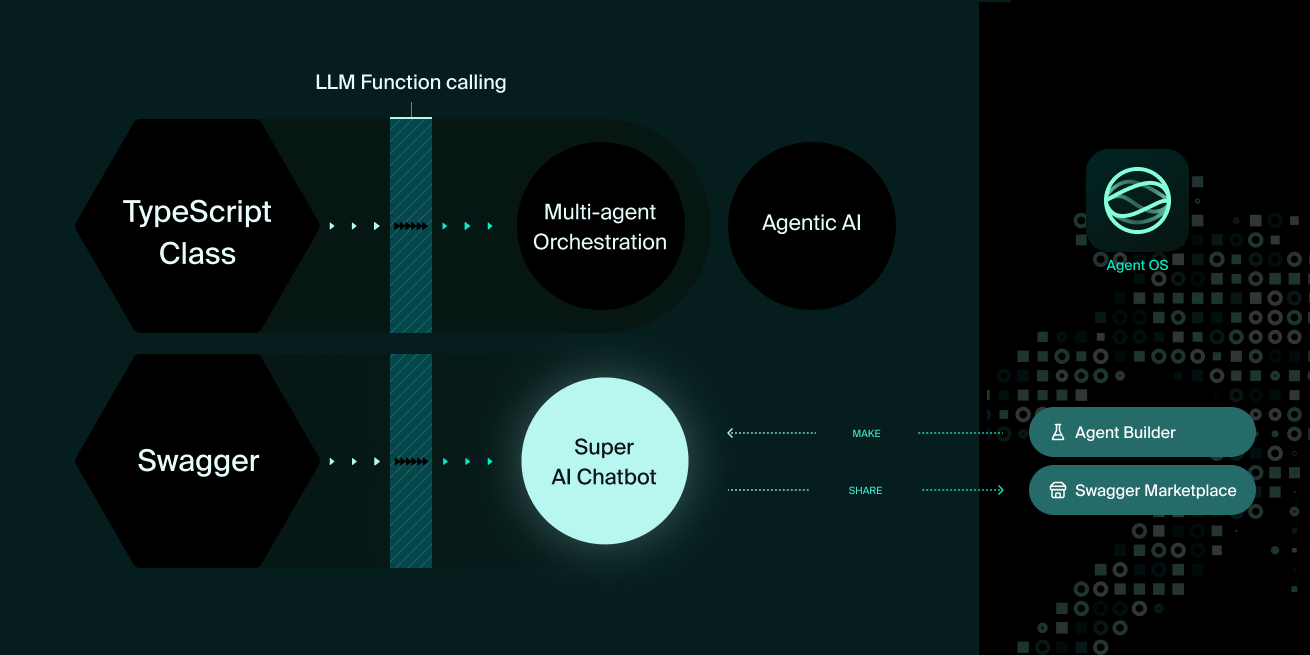

The simplest Agentic AI library, specialized in LLM Function Calling.

Don’t compose complicated agent graphs or workflows. Just list your Swagger/OpenAPI documents or TypeScript class types and hand them to agentica, and agentica will do everything through function calling.

Look at the demonstration below to see how easy and powerful agentica is. Users can search for and purchase products through conversation alone; the AI chatbot calls the appropriate backend API operations and TypeScript class functions through LLM function calling.
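To make the idea of function calling concrete, here is a minimal sketch of the dispatch step: the LLM chooses a function name and arguments, and the host program invokes the matching method on a class instance. Everything in it is an assumption made for illustration (the methods and sample data of `ShoppingCounselor`, the `FunctionCall` shape, and the `dispatch` helper); it is not agentica's actual implementation.

```typescript
// Hypothetical shape of a function call selected by an LLM.
interface FunctionCall {
  name: string;
  arguments: Record<string, unknown>;
}

// Hypothetical class whose methods are exposed to the agent (sample data only).
class ShoppingCounselor {
  private readonly products = [
    { id: 1, name: "MacBook Pro" },
    { id: 2, name: "MacBook Air" },
  ];

  // Return every product whose name contains the keyword (case-insensitive).
  search(input: { keyword: string }): { id: number; name: string }[] {
    const keyword = input.keyword.toLowerCase();
    return this.products.filter((p) => p.name.toLowerCase().includes(keyword));
  }

  // "Purchase" a product by id and return a confirmation message.
  purchase(input: { id: number }): string {
    const product = this.products.find((p) => p.id === input.id);
    if (product === undefined) throw new Error(`Unknown product: ${input.id}`);
    return `Ordered ${product.name}`;
  }
}

// Look up the requested method on the target and call it with the arguments.
function dispatch(target: object, call: FunctionCall): unknown {
  const method = (target as Record<string, unknown>)[call.name];
  if (typeof method !== "function") {
    throw new Error(`No such function: ${call.name}`);
  }
  return method.call(target, call.arguments);
}

const counselor = new ShoppingCounselor();
const found = dispatch(counselor, { name: "search", arguments: { keyword: "pro" } });
const receipt = dispatch(counselor, { name: "purchase", arguments: { id: 1 } });
console.log(found, receipt);
```

A real agent also has to describe each function to the LLM and check the arguments it produces; this sketch covers only the final invocation.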

Pseudo Code

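```typescript
import { Agentica } from "@agentica/core";
import typia from "typia";

// ShoppingCounselor, ShoppingPolicy and ShoppingSearchRag are
// TypeScript class types assumed to be defined elsewhere.
const agent = new Agentica({
  controllers: [
    // Swagger/OpenAPI document of the backend API
    await fetch(
      "https://shopping-be.wrtn.ai/editor/swagger.json",
    ).then(r => r.json()),
    // TypeScript class types
    typia.llm.application<ShoppingCounselor>(),
    typia.llm.application<ShoppingPolicy>(),
    typia.llm.application<ShoppingSearchRag>(),
  ],
});
await agent.conversate("I wanna buy MacBook Pro");
```

Setup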

```bash
$ npx agentica start <directory>

----------------------------------------
 Agentica Setup Wizard
----------------------------------------
? Package Manager (use arrow keys)
  > npm
    pnpm
    yarn (berry is not supported)
? Project Type
    NodeJS Agent Server
  > NestJS Agent Server
    React Client Application
    Standalone Application
? Embedded Controllers (multi-selectable)
    (none)
    Google Calendar
    Google News
  > Github
    Reddit
    Slack
    ...
```

You can start @agentica development from the boilerplate composition CLI.

When you run the npx agentica start <directory> command, the CLI (Command Line Interface) prompt asks which package manager you want to use and which type of project you want to create. It then adds some useful controllers (embedded functions) to the project.

There are four project types to choose from.

The first, the NodeJS server, is an Agentic AI server built on NodeJS that communicates over the WebSocket protocol. The second, the NestJS server, is the same kind of server, but its backend is composed with NestJS and Nestia. The third, the React client application, is a frontend application built with React that connects to one of those WebSocket servers.

When you choose “NodeJS server” or “NestJS server” as the project type, the CLI asks you to select embedded controllers. Embedded controllers are pre-built functions you can use in your agent, such as “Google Calendar” or “Github”. You can embed multiple pre-built controllers, or select “(none)” to embed nothing.
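The wizard's questions can be summarized as a small data model. The sketch below is only an illustration of the rules described above: the type names, the `WizardAnswers` shape, and the helper functions are hypothetical and are not part of the agentica CLI.

```typescript
// Hypothetical model of the wizard's answers; all names are illustrative only.
type PackageManager = "npm" | "pnpm" | "yarn";
type ProjectType = "nodejs" | "nestjs" | "react" | "standalone";

interface WizardAnswers {
  packageManager: PackageManager;
  projectType: ProjectType;
  controllers: string[]; // e.g. ["Github", "Slack"]; an empty list means "(none)"
}

// The controller question applies only to the two server project types.
function asksForControllers(projectType: ProjectType): boolean {
  return projectType === "nodejs" || projectType === "nestjs";
}

// Drop controller selections for project types that are never asked for them.
function normalize(answers: WizardAnswers): WizardAnswers {
  return asksForControllers(answers.projectType)
    ? answers
    : { ...answers, controllers: [] };
}

const answers = normalize({
  packageManager: "npm",
  projectType: "nestjs",
  controllers: ["Github"],
});
console.log(answers);
```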

Standalone frontend application.

The last option, standalone, builds a frontend application that composes the agent in the frontend environment, without any backend server interaction.

It is intended only for testing, never for production. If you deploy the standalone application to production, your LLM API key will be exposed to the public.
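One way to enforce this rule in a build or startup script is a small guard like the one below. It is a sketch under stated assumptions: the function names, the `ProjectType` union, and the `LLM_API_KEY` variable name are all hypothetical and do not come from agentica.

```typescript
// Hypothetical project types, mirroring the four wizard options.
type ProjectType = "nodejs" | "nestjs" | "react" | "standalone";

// Refuse to run a standalone (frontend-only) agent in production, because
// its bundle would have to ship the LLM API key to every visitor.
function assertSafeDeployment(projectType: ProjectType, mode: string): void {
  if (projectType === "standalone" && mode === "production") {
    throw new Error("Standalone application must not be deployed to production");
  }
}

// Server-side projects can keep the key on the server and read it from the
// environment. "LLM_API_KEY" is an assumed variable name.
function loadApiKey(env: Record<string, string | undefined>): string {
  const key = env["LLM_API_KEY"];
  if (key === undefined || key.length === 0) {
    throw new Error("LLM_API_KEY is not set");
  }
  return key;
}

assertSafeDeployment("standalone", "development"); // allowed: testing only
assertSafeDeployment("nestjs", "production"); // allowed: key stays on the server
const apiKey = loadApiKey({ LLM_API_KEY: "sk-example" }); // placeholder value
console.log(apiKey.length > 0);
```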